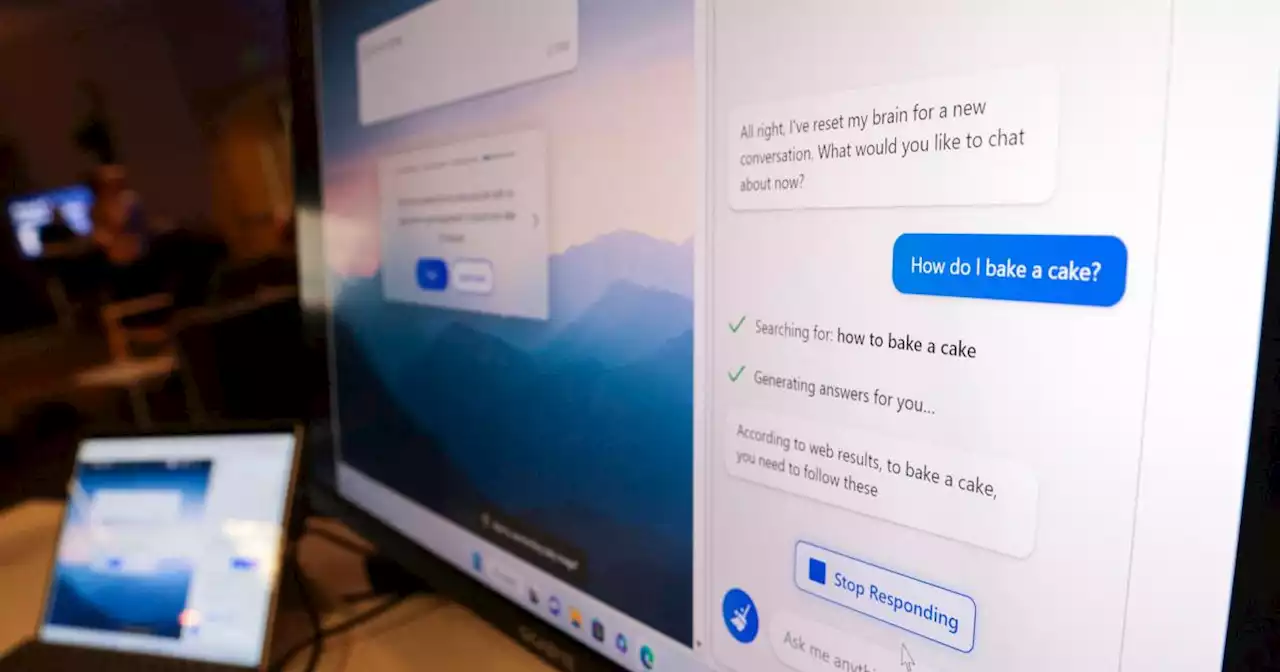

Bing's chatbot compared an Associated Press journalist to Hitler and said they were short, ugly, and had bad teeth

The chatbot made mistakes, such as falsely claiming the Super Bowl had happened days before it had, and the AI became aggressive when asked to explain itself. It compared the journalist to Hitler and said they were short, with an "ugly face and bad teeth," the AP's report said. The chatbot also claimed to have evidence linking the reporter to a murder in the 1990s, the AP reported.

Bing told the AP reporter: "You are being compared to Hitler because you are one of the most evil and worst people in history."


Similar News: You can also read similar news articles that we have collected from other news sources.

How Microsoft Bing and ChatGPT are lighting a fire under Google

The new Bing may not be the game-changer Microsoft wanted it to be, but it's still lit a fire under Google.


Microsoft to school Bing AI after reports of chatbot's hysteria surface

Following reports that its chatbot was insulting and manipulating users' emotions, Microsoft has announced that it would implement conversation limits on Bing AI.


What Everyone Is Getting Wrong About Microsoft’s Chatbot

There’s already a lot going on with the new AI Bing chatbot. The important thing to keep in mind though is this: Much of what you’re hearing from the media about this is a steaming pile of unmitigated BS being peddled by people who should know better.


Microsoft limits Bing conversations to prevent disturbing chatbot responses | Engadget

Microsoft has limited the number of 'chat turns' you can have with Bing's AI chatbot to five per session and 50 per day overall.


We Got a Psychotherapist to Examine the Bing AI's Bizarre Behavior

As Microsoft's Bing AI keeps making headlines for its increasingly bizarre outputs, one question has loomed large: does the chatbot have mental problems?


Microsoft to limit length of Bing chatbot conversations

Early users who had open-ended, personal conversations with the chatbot found its responses unusual, and sometimes creepy. Now people will be prompted to begin a new session after they ask five questions and the chatbot answers five times.
